Why Reliance on AI for Writing Jobs Is a Fatal Mistake

AI Is Not a Substitute for Professional Medical Guidance

ChatGPT—an artificial intelligence (AI) chatbot developed by OpenAI—has become an all-in-one tool for answering many simple and difficult questions, including requests for medical advice. It can answer medical questions and ace the United States Medical Licensing Exam (USMLE), but it cannot replace an appointment with a doctor at the hospital.


As ChatGPT advances, it has the potential to transform the healthcare sector completely. When integrated with health systems, it may improve patient access to care and boost doctors’ work efficiency. However, because it is AI-based, several concerns about its potential dangers exist.

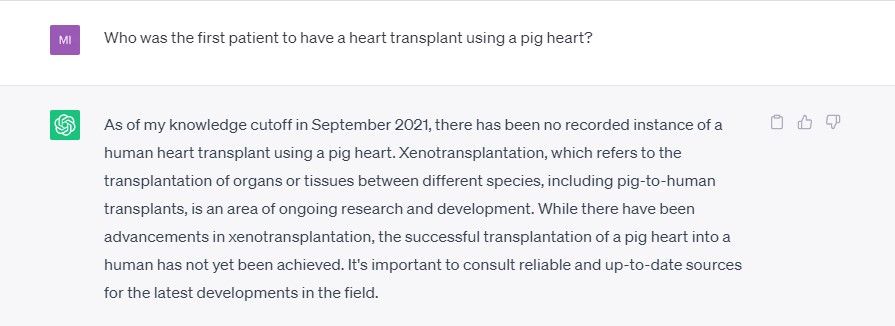

1. ChatGPT Has Limited Knowledge

ChatGPT does not know everything. According to OpenAI, ChatGPT has limited knowledge, particularly when it comes to what happened after September 2021.

ChatGPT does not have direct access to search engines or the internet. It was trained using a vast amount of text data from numerous sources, including books, websites, and other texts. It does not “know” the data it is delivering. Instead, ChatGPT uses text it has read to create predictions about the words to use and in what order.
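To make the idea of predicting the next word from previously read text concrete, here is a minimal toy sketch in Python. It is not how ChatGPT is implemented: ChatGPT uses a large neural network, while this example only counts which word most often follows another in a tiny sample sentence. The function names `train_bigrams` and `predict_next` and the sample text are assumptions made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows each word in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text; a real model reads vastly more data.
corpus = "the doctor examines the patient and the doctor orders a test"
model = train_bigrams(corpus)

print(predict_next(model, "the"))         # most common word after "the"
print(predict_next(model, "transplant"))  # a word the model never saw
```

The second call returns `None` because "transplant" never appeared in the sample text. In the same way, though on a far larger scale, a model trained on older text has nothing to draw on for events that happened after its training data ends.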

Therefore, it cannot get current news on developments in medical fields. Yes, ChatGPT is unaware of the pig-to-human heart transplant or any other very recent breakthroughs in medical science.

2. ChatGPT May Produce Incorrect Information

ChatGPT can answer the questions you ask, but the responses can be inaccurate or biased. According to a PLOS Digital Health study, ChatGPT performed with at least 50% accuracy across all USMLE examinations. And while it exceeded the 60% passing threshold in some aspects, there is still the possibility of error.

Furthermore, not all of the information used to train ChatGPT is authentic. Responses based on unverified or potentially biased information may be incorrect or outdated. In the world of medicine, inaccurate information can even cost a life.

Because ChatGPT cannot independently research or verify material, it cannot differentiate between fact and fiction. Respected medical journals, including the Journal of the American Medical Association (JAMA), have established strict rules that only humans can write the scientific studies they publish. As a result, you should always fact-check ChatGPT’s responses.

3. ChatGPT Does Not Physically Examine You

Medical diagnoses are not solely dependent on symptoms. Physicians can gain insights into the pattern and severity of an illness through a patient’s physical examination. In order to diagnose patients, doctors today use both medical technologies and the five senses.

ChatGPT cannot perform a physical examination, or even a complete virtual checkup; it can only reply to the symptoms you provide as messages. Errors in a physical examination, or skipping it entirely, can harm a patient’s safety and care. Because ChatGPT has not physically examined you, it may offer an incorrect diagnosis.


4. ChatGPT Can Provide False Information

A recent study by the University of Maryland School of Medicine on ChatGPT’s advice for breast cancer screening found the following results:

“We’ve seen in our experience that ChatGPT sometimes makes up fake journal articles or health consortiums to support its claims.” —Paul Yi M.D., Assistant Professor of Diagnostic Radiology and Nuclear Medicine at UMSOM

As part of our testing of ChatGPT, we requested a list of non-fiction books that cover the subject of the subconscious mind. In response, ChatGPT produced a fake book titled “The Power of the Unconscious Mind” by Dr. Gustav Kuhn.

When we inquired about the book, it replied that it was a “hypothetical” book that it created. ChatGPT won’t tell you if a journal article or book is false if you don’t inquire further.

5. ChatGPT Is Just an AI Language Model

Language models function by memorizing and generalizing text rather than examining or studying a patient’s condition. Despite generating responses that match human standards in terms of language and grammar, ChatGPT still has a number of problems, much like other AI bots.

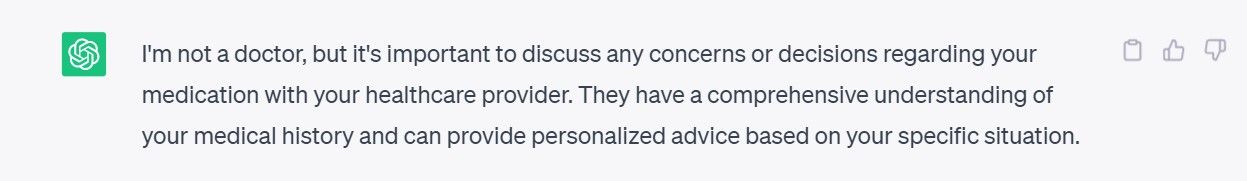

ChatGPT Is Not a Replacement for Your Doctor

Human doctors will always be needed to make the final call on healthcare decisions. ChatGPT usually advises speaking with a licensed healthcare practitioner when you ask for medical advice.

Artificial intelligence-powered tools like ChatGPT can be used to schedule doctor’s appointments, assist patients in receiving treatments, and maintain their health information. But they cannot take the place of a doctor’s expertise and empathy.

You shouldn’t rely on an AI-based tool to diagnose or treat a health condition, whether it be physical or mental.


- Title: Why Reliance on AI for Writing Jobs Is a Fatal Mistake

- Author: Jeffrey

- Created at : 2024-08-16 11:34:38

- Updated at : 2024-08-17 11:34:38

- Link: https://tech-haven.techidaily.com/why-reliance-on-ai-for-writing-jobs-is-a-fatal-mistake/

- License: This work is licensed under CC BY-NC-SA 4.0.