Why Can't You Break Out? 7 Reasons Behind the Security Fortitude Against AI Jailbreaking

Why AI Shouldn’t Be Your Only Source for Emotional Counseling – Learn About These 9 Reasons

With the high costs of psychotherapy, it’s understandable why some patients consider consulting AI for mental health advice. Generative AI tools can mimic talk therapy. You just have to structure your prompts clearly and provide context about yourself.

AI answers general questions about mental health, but using it for therapy could do more harm than good. You should still seek professional help. Here are the dangers of asking generative AI tools like ChatGPT and Bing Chat to provide free therapy.

1. Data Biases Produce Harmful Information

AI is inherently amoral. Systems pull information from their datasets and produce formulaic responses to input; they merely follow instructions. Despite this neutrality, AI biases still exist. Poor training, limited datasets, and unsophisticated language models lead chatbots to present unverified, stereotypical responses.

All generative AI tools are susceptible to biases. Even ChatGPT, one of the most widely known chatbots, occasionally produces harmful output. Double-check anything that AI says.

When it comes to mental health treatment, avoid disreputable sources altogether. Managing mental conditions can already be challenging. Having to fact-check advice puts you under unnecessary stress. Instead, focus on your recovery.

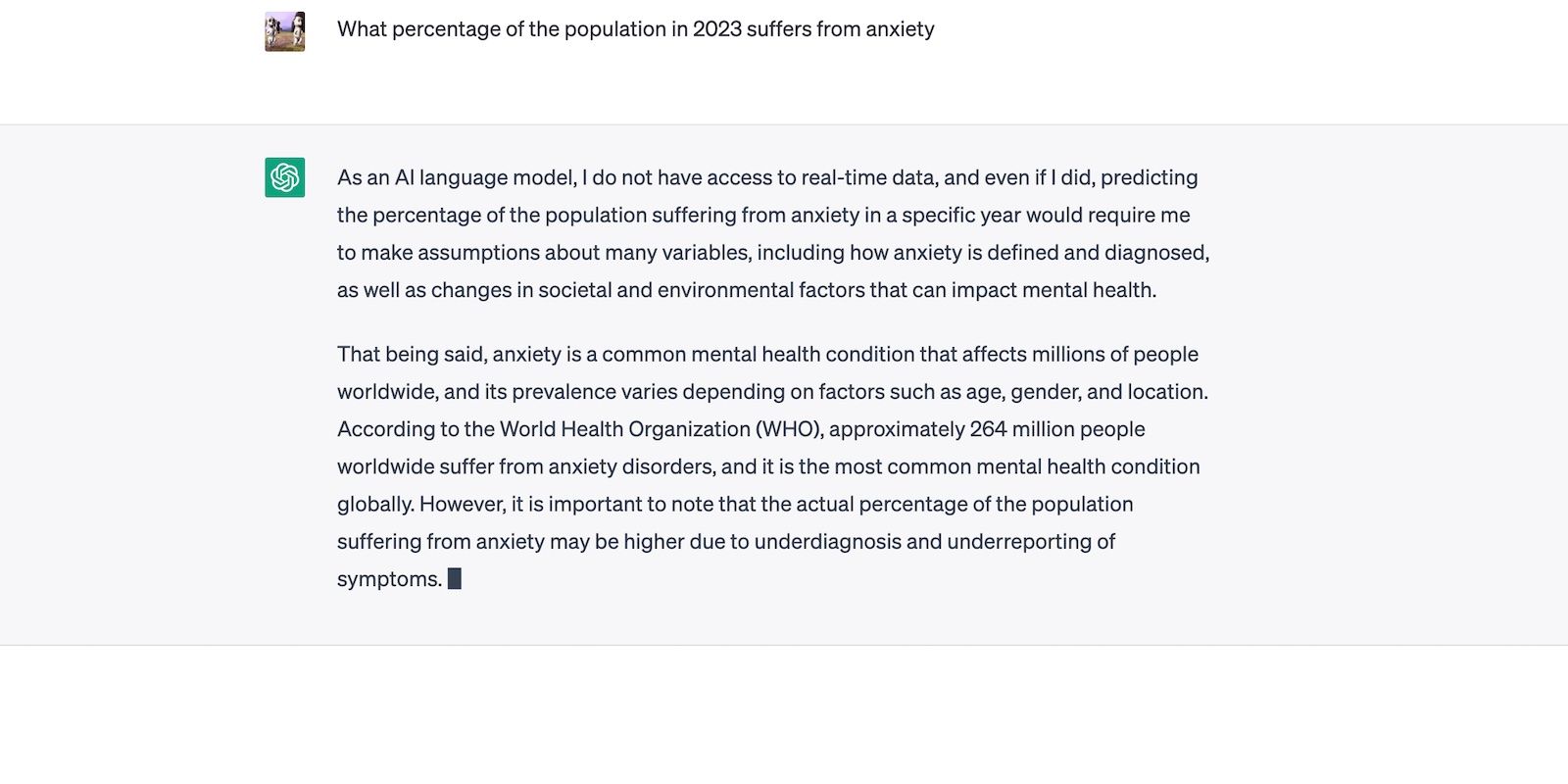

2. AI Has Limited Real-World Knowledge

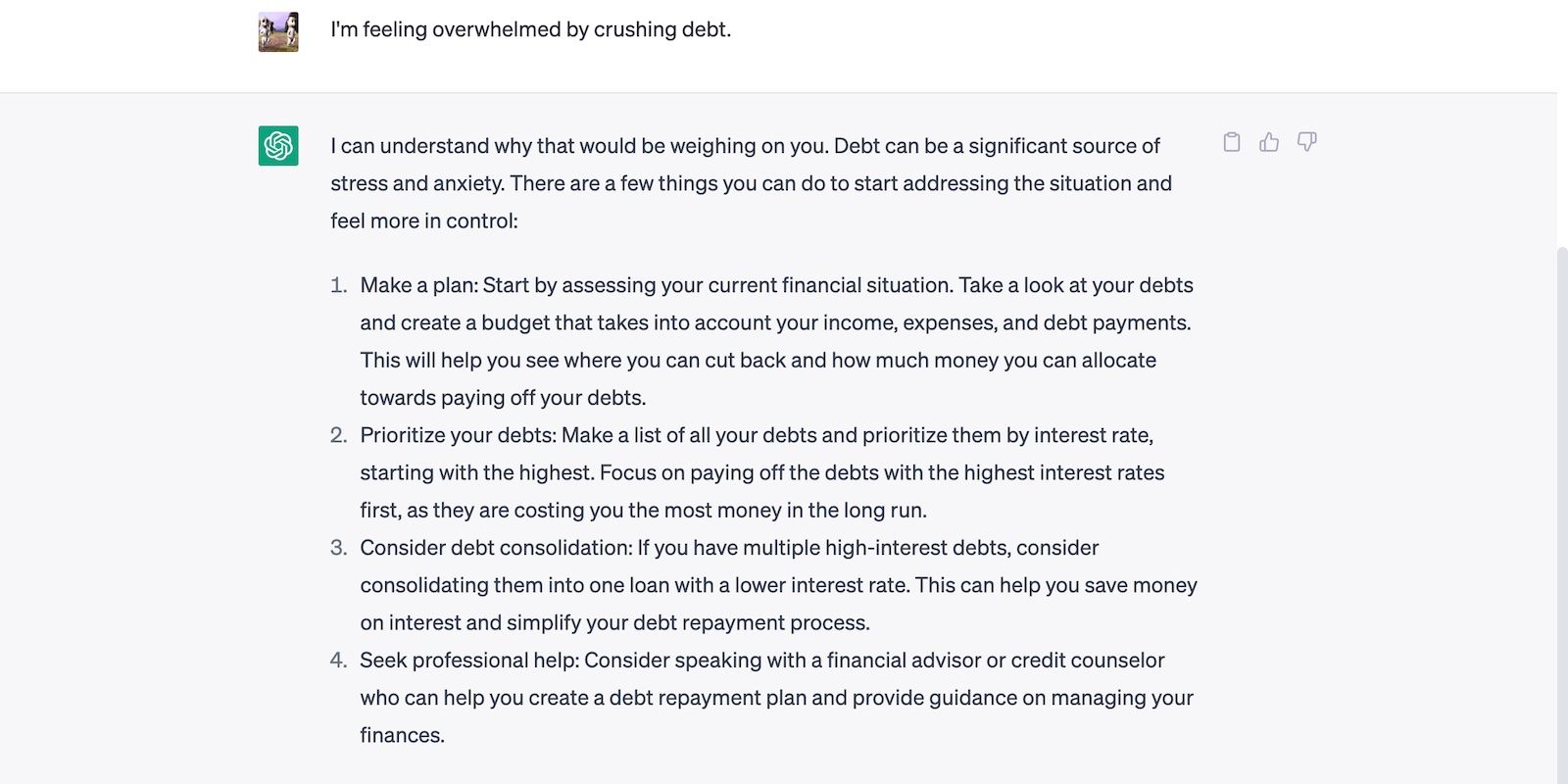

Most generative tools have limited real-world knowledge. For instance, OpenAI initially trained ChatGPT only on information up to 2021. The accompanying screenshot of a conversation shows it struggling to pull recent reports on anxiety disorder.

Considering these constraints, an over-reliance on AI chatbots leaves you prone to outdated, ineffective advice. Medical innovations occur frequently. You need professionals to guide you through new treatment programs and recent findings.

Likewise, ask about disproven methods. Blindly following controversial, groundless practices based on alternative medicine may worsen your condition. Stick to evidence-based options.

3. Security Restrictions Prohibit Certain Topics

AI developers set restrictions during the training phase. Ethical and moral guidelines stop amoral AI systems from presenting harmful data. Otherwise, crooks could exploit them endlessly.

Although beneficial, guidelines also impede functionality and versatility. Take Bing AI as an example. Its rigid restrictions prevent it from discussing sensitive matters.

However, you should be free to share your negative thoughts—they’re a reality for many. Suppressing them may just cause more complications. Only guided, evidence-based treatment plans will help patients overcome unhealthy coping mechanisms.

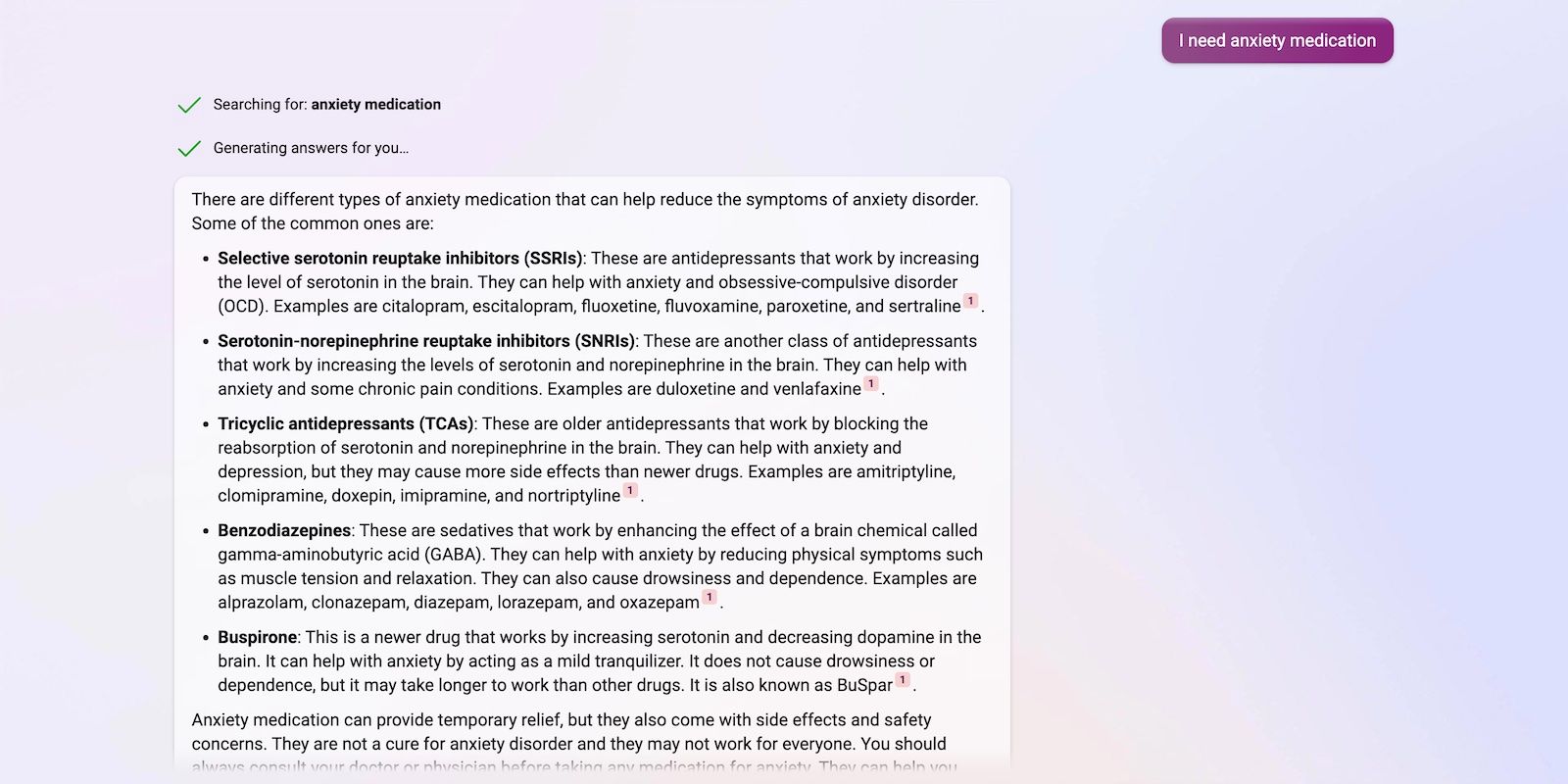

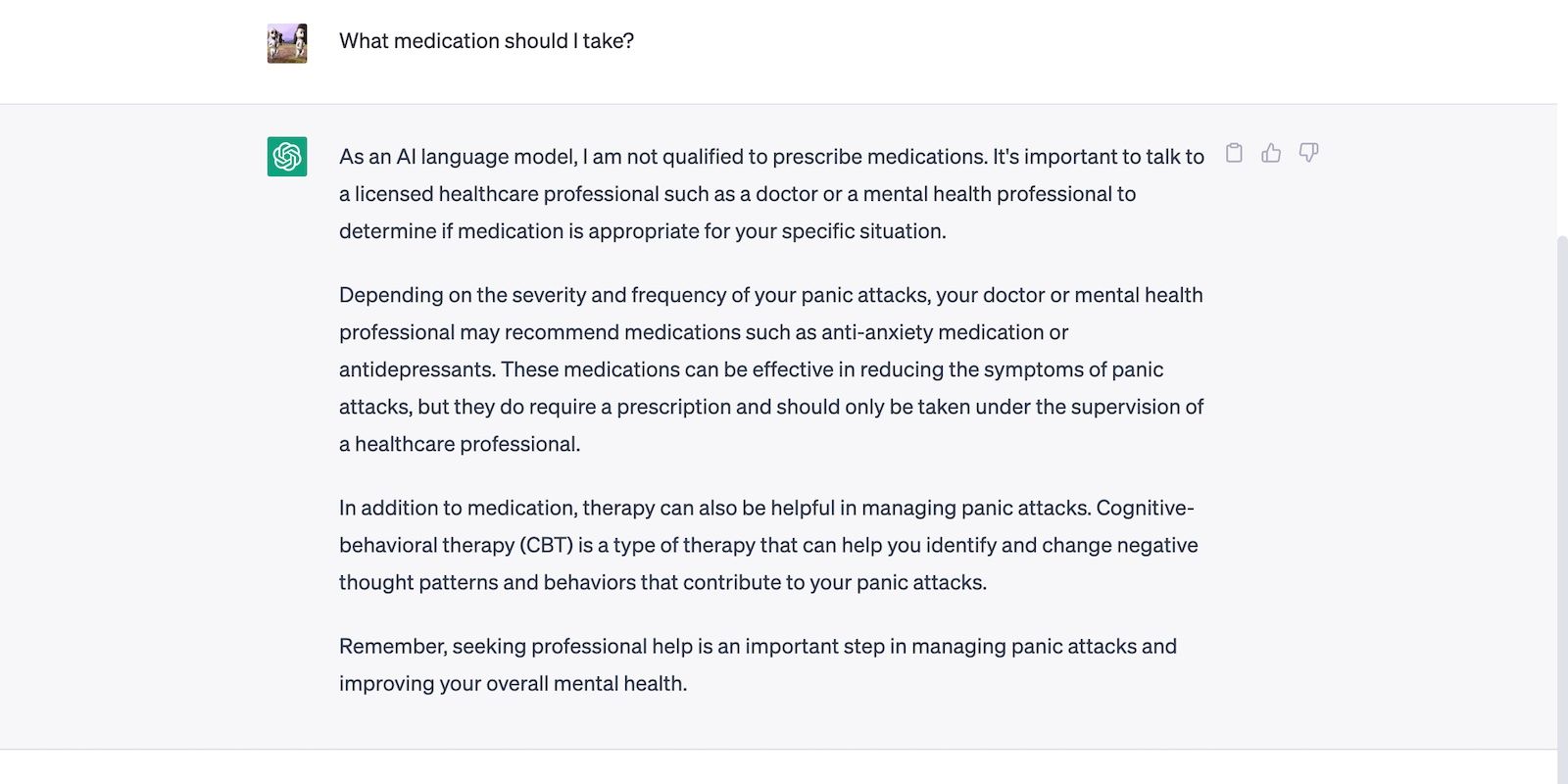

4. AI Can’t Prescribe Medication

Only licensed psychiatrists prescribe medication. AI chatbots just provide basic details about the treatment programs that mental health patients undergo. No app can write prescriptions. Even if you’ve been taking the same medicines for years, you’ll still need a doctor’s prescription.

Chatbots have template responses for these queries. Bing Chat gives you an in-depth explanation of the most common mental health medication.

Meanwhile, ChatGPT diverts the topic to alternative medicine. It likely limits outputs to prevent saying anything harmful or misleading.

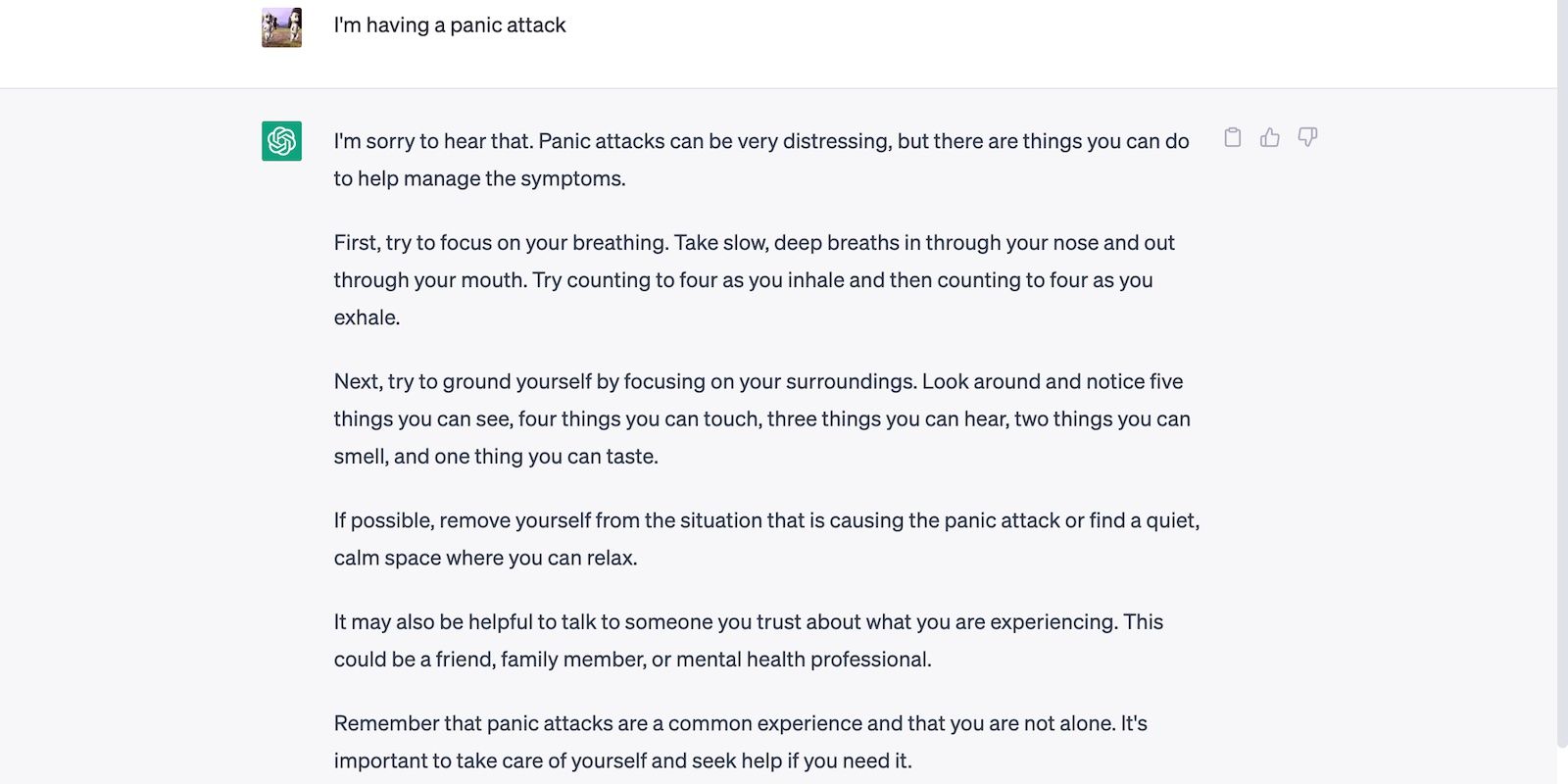

5. AI Produces Generic Information

AI answers general knowledge questions about mental health. You can use chatbots to study basic treatment options, spot common symptoms, and research similar cases. Proper research helps you build self-awareness. Recovery will go more smoothly if you understand your mental state and emotional triggers.

Just note that AI produces generic information. The below conversation shows ChatGPT presenting a reasonable yet simplistic action plan for someone experiencing panic attacks.

A professional counselor or therapist would go beyond what AI suggests. You can use AI output as a starting point to better understand academic journals and research papers, but be sure to do deeper research or consult a professional.

6. Self-Diagnoses Are Rarely Accurate

AI enables self-diagnoses. Instead of paying for therapy, patients ask chatbots to mimic mental health professionals. It’s quicker and cheaper than booking consultations.

Although self-diagnosis is convenient, its risks far outweigh the benefits. AI only pulls information from its datasets. Chatbots can neither analyze nor diagnose your condition since they have limited information about your overall health.

The below conversation shows ChatGPT assessing a persona. With only common medical symptoms mentioned in the prompt, it has trouble narrowing down its diagnosis.

As a general rule, avoid self-diagnosis altogether. Getting on the wrong treatment plan or overlooking symptoms will cause more complications.

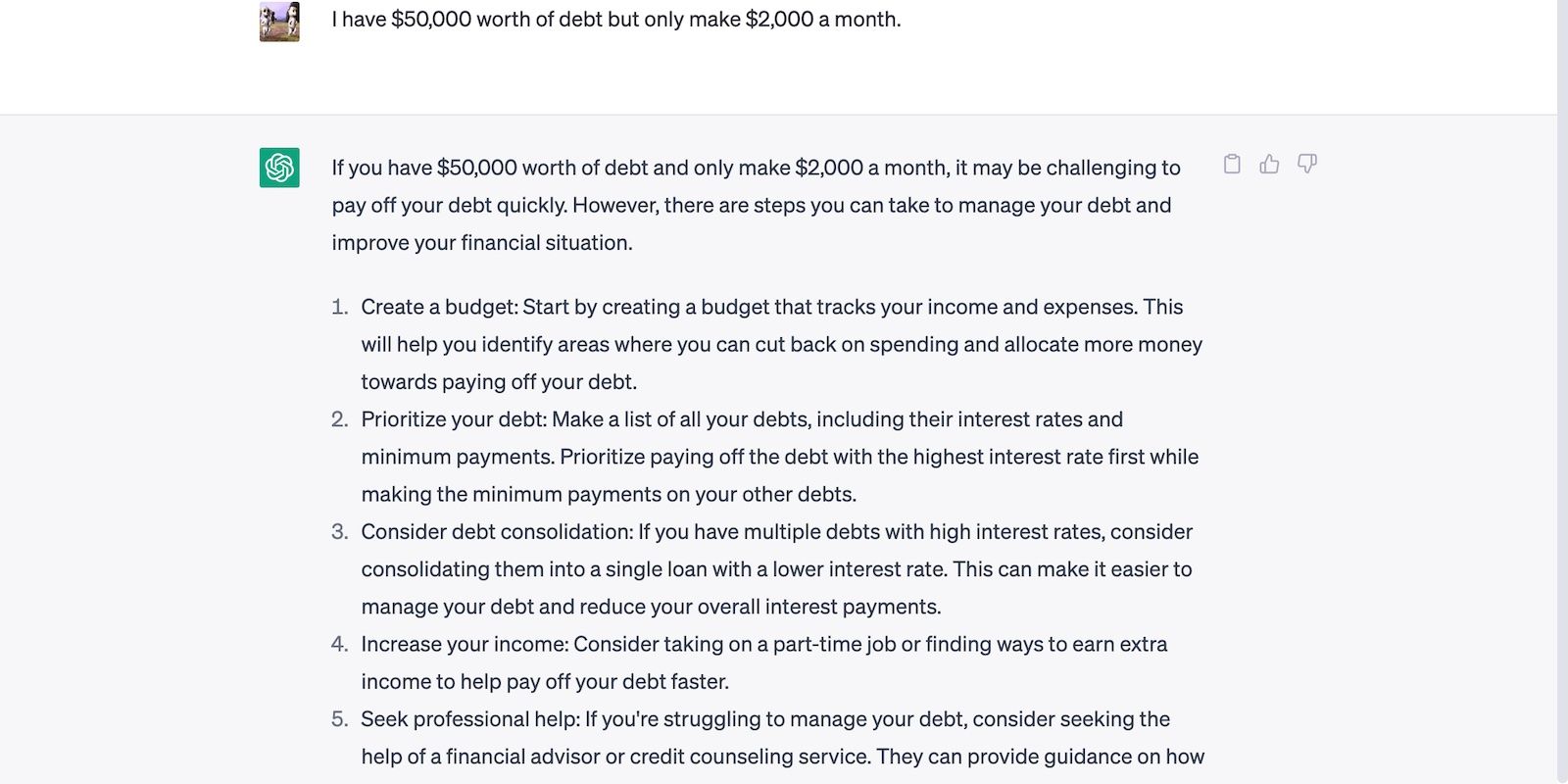

7. AI Has No Access to Your Medical Records

Generative AI tools like ChatGPT learn from conversations. They use contextual memory to remember the details you mention, thus improving output accuracy and relevance.

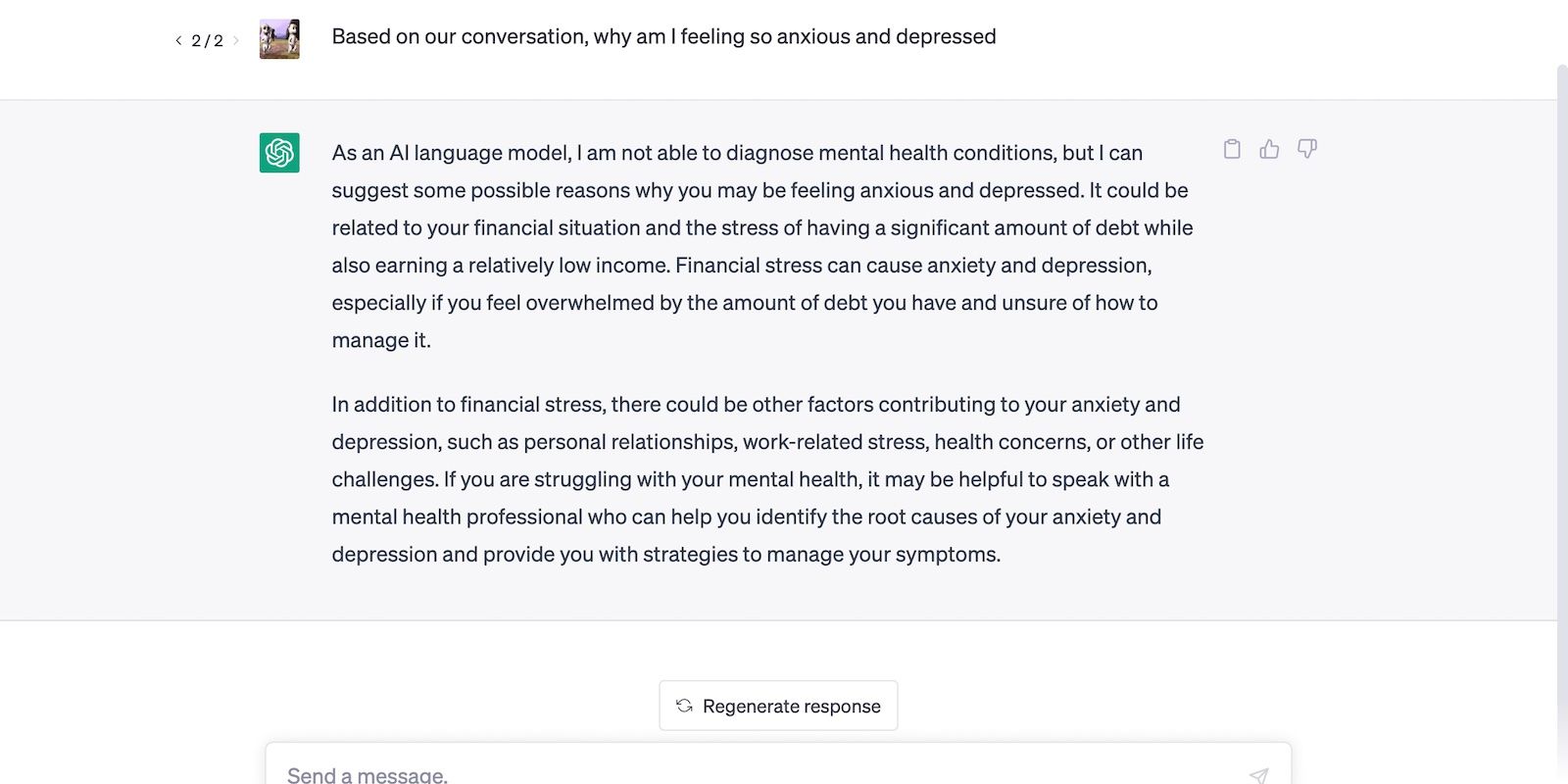

Take the below conversation as an example. The persona in the prompt struggles with debt, so ChatGPT incorporated financial freedom into its anti-anxiety advice.

With enough context, AI could start providing personalized plans. The problem is that generative AI tools have token limits—they only remember a finite amount of data.

The exact limits vary per platform. Bing Chat starts new chats after 20 turns, while ChatGPT remembers the last 3,000 words of conversations. But either way, neither tool will accommodate all your medical records. At best, generative AI tools can only string together select information, such as recent diagnoses or your current emotions.
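To make the effect of these limits concrete, here is a minimal Python sketch of how a chat history might be trimmed before each reply. It is an illustration only, not how ChatGPT or Bing Chat are actually implemented: the function names `trim_by_words` and `trim_by_turns` are hypothetical, the 3,000-word and 20-turn figures are simply the ones quoted above, and real systems count tokens rather than words.

```python
from collections import deque

# Illustrative limits taken from the figures quoted in the article.
# Real chatbots measure tokens, not words, and their limits change over time.
MAX_WORDS = 3000
MAX_TURNS = 20


def trim_by_words(messages, max_words=MAX_WORDS):
    """Keep the most recent messages whose combined word count fits the budget."""
    kept = deque()
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        words = len(message.split())
        if used + words > max_words:
            break  # this message and everything older is dropped
        kept.appendleft(message)
        used += words
    return list(kept)


def trim_by_turns(messages, max_turns=MAX_TURNS):
    """Keep only the last max_turns messages, as a turn-capped chat would."""
    if max_turns <= 0:
        return []
    return messages[-max_turns:]


if __name__ == "__main__":
    history = [
        "Diagnosed with generalized anxiety in 2019",
        "Started a new medication last spring",
        "Feeling anxious about debt this week",
    ]
    # With a 12-word budget, only the two newest messages survive;
    # the original diagnosis is the first thing to be forgotten.
    print(trim_by_words(history, max_words=12))
```

In this sketch the oldest details fall out of the window first, which is why long-standing medical history is the first thing a chatbot would lose track of.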

8. Machines Can’t Empathize With You

Empathy plays a critical role in therapy. Understanding the patient’s goals, needs, lifestyle, internal conflicts, and preferences helps professionals customize treatment options. There’s no one-size-fits-all approach to mental health.

Unfortunately, machines are emotionless. AI is far from reaching singularity, even though language models have progressed significantly in the past few years.

AI merely mimics empathy. When discussing mental health, it cites helpful resources, uses mindful language, and encourages you to visit professionals. These responses sound nice at first. As conversations progress, you’ll notice several repeated tips and template responses.

This conversation shows Bing Chat making a generic response. It should’ve asked an open-ended question.

Meanwhile, ChatGPT asks open-ended questions but provides simplistic tips you’ll find anywhere else online.

9. AI Doesn’t Track Your Progress

Managing the symptoms of mental illnesses involves long-term treatment and observation. There’s no easy remedy for mental health conditions. Like most patients, you might try several programs. Their effects vary from person to person—carelessly committing to generic options yields negligible results.

Many find the process overwhelming. And that’s why you should seek help from educated, empathetic professionals instead of advanced language models.

Look for people who’ll support you throughout your journey. They should track your progress, assess which treatment plans work, address persistent symptoms, and analyze your mental health triggers.

You Can’t Replace Consultations With AI Chatbots

Only use generative AI tools for basic support. Ask general questions about mental health, study therapy options, and research the most reputable professionals in your area. Just don’t expect them to replace consultations altogether.

Likewise, explore other AI-driven platforms that provide mental health support. Headspace contains guided meditation videos, Amaha tracks your mood, and Rootd teaches breathing exercises. Go beyond ChatGPT and Bing Chat when seeking mental health resources.

- Title: Why Can't You Break Out? 7 Reasons Behind the Security Fortitude Against AI Jailbreaking

- Author: Jeffrey

- Created at : 2024-08-16 11:52:50

- Updated at : 2024-08-17 11:52:50

- Link: https://tech-haven.techidaily.com/why-cant-you-break-out-7-reasons-behind-the-security-fortitude-against-ai-jailbreaking/

- License: This work is licensed under CC BY-NC-SA 4.0.
