How Does Google's New Gemini AI Compare to OpenAI's ChatGPT? An In-Depth Analysis

Evaluating the Battle Between Google Gemini and OpenAI’s ChatGPT in AI Innovation

Quick Links

- What Is Google’s Gemini AI Model?

- How to Use Google Gemini AI

- How Gemini Compares to GPT-3.5 and GPT-4

- Is Gemini Better than ChatGPT?

Key Takeaways

- Google’s Gemini AI model comes in three variants (Ultra, Pro, and Nano), each aimed at different tasks and levels of complexity.

- Gemini Ultra shows promising results on key AI benchmarks but, as of December 2023, is not available for public use. Google says it should be available in January 2024.

- Gemini Pro is available now, and while it performs quite well, it does not yet dethrone GPT-4.

Google has consistently promised that its Gemini AI model would be better than OpenAI’s GPT-4, the model that powers ChatGPT Plus. Now that Google Gemini has launched, we can finally put it to the test and see how Gemini compares to GPT-4.

When Google launched Bard in March 2023, there were many reasons to be excited. Finally, OpenAI’s ChatGPT monopoly would be broken, and we’d get worthy competition.

But Bard was never the AI titan people hoped for, and GPT-4 remains the dominant generative AI chatbot platform. Now Google’s Gemini is here. Is the long-awaited AI model better than ChatGPT?

What Is Google’s Gemini AI Model?

Gemini is Google’s most capable generative AI model, able to understand and operate across different data formats, including text, audio, images, and video. It is Google’s attempt to build a single, unified model that draws on the strengths of its best AI technologies. Gemini will be available in three variants:

- Gemini Ultra: The largest and most capable variant designed to handle highly complex tasks.

- Gemini Pro: The best model for scaling and delivering high performance across a wide range of tasks, but less capable than Ultra.

- Gemini Nano: The most efficient model designed for on-device task deployment. For example, developers can use Gemini Nano to build mobile apps or integrated systems, bringing powerful AI into the mobile space.

On its official blog, The Keyword, Google says Gemini Ultra outperforms the state of the art on several benchmarks, and claims it beats the industry-leading GPT-4 on a number of key ones.

With an unprecedented 90.0% score on MMLU (Massive Multitask Language Understanding), a rigorous test spanning 57 subjects, Google says Gemini Ultra is the first model to outperform human experts on this benchmark.

Gemini Ultra can also understand, explain, and generate high-quality code in some of the world’s most popular programming languages, including Go, JavaScript, Python, Java, and C++. On paper, these are impressive results. But benchmarks do not always tell the whole story. So, how well does Gemini perform on real-world tasks?

How to Use Google Gemini AI

Of the three Gemini variants, Gemini Pro is the one you can start using right now, through Google’s Bard chatbot. To use Gemini Pro with Bard, head to bard.google.com and sign in with your Google account.

Google says that Gemini Ultra will roll out in January 2024, so we’ve had to settle for testing Gemini Pro against ChatGPT for now.

How Gemini Compares to GPT-3.5 and GPT-4

Whenever a new AI model launches, it is tested against OpenAI’s GPT models, which are widely treated as the state-of-the-art yardstick for other models. So, using Bard and ChatGPT, we tested Gemini’s abilities in math, creative writing, code generation, and image interpretation.

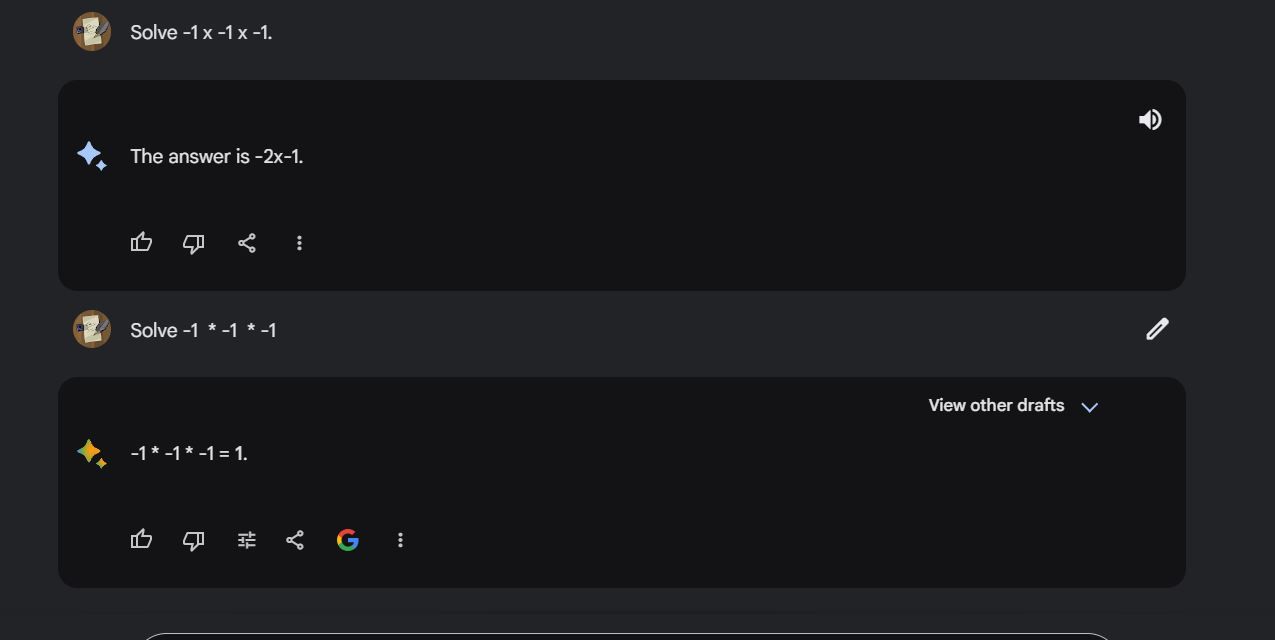

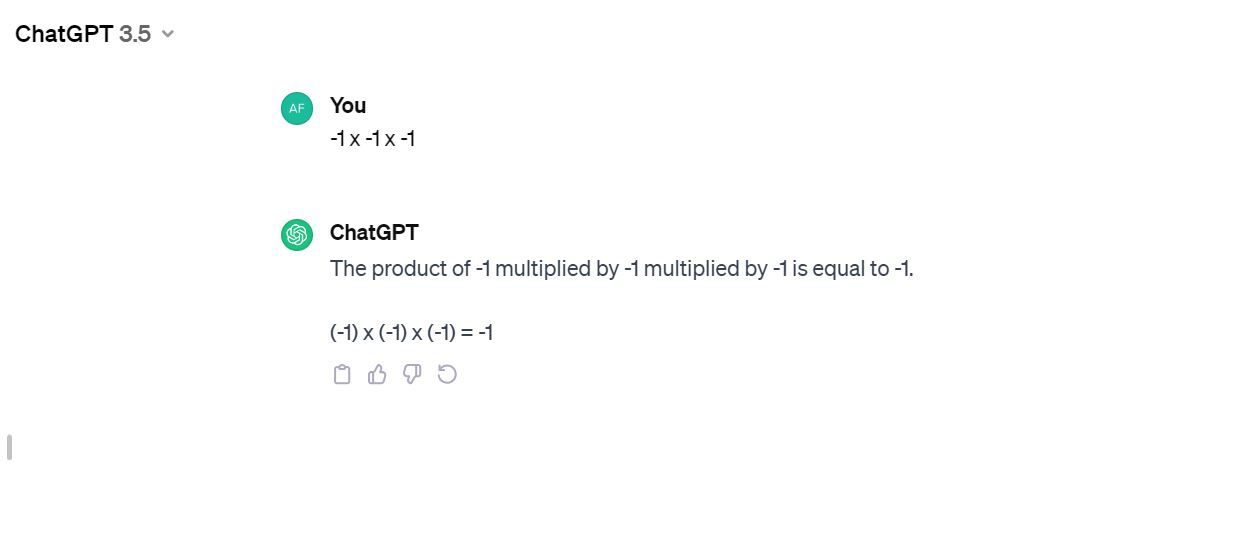

Starting with the easiest math question we could think of, we asked both chatbots to solve: (-1) × (-1) × (-1).

Bard went first. Its first two answers were wrong. It finally got the right answer on the third attempt, but that doesn’t count.

Next, we tried ChatGPT running GPT-3.5. It got the answer right on the first try.
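For reference, here is the expected answer worked out step by step. Pairing the first two factors gives a positive product, and multiplying by the third negative factor flips the sign back, so the result is negative:

$$
(-1) \times (-1) \times (-1) = \bigl[(-1) \times (-1)\bigr] \times (-1) = 1 \times (-1) = -1
$$

More generally, a product of an odd number of negative factors is negative, which is why the correct answer here is -1.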

To test Gemini’s image interpretation abilities, we asked it to interpret some popular memes. It declined, saying it can’t interpret images with people in them. ChatGPT, running GPT-4V, was willing and able to do so flawlessly.

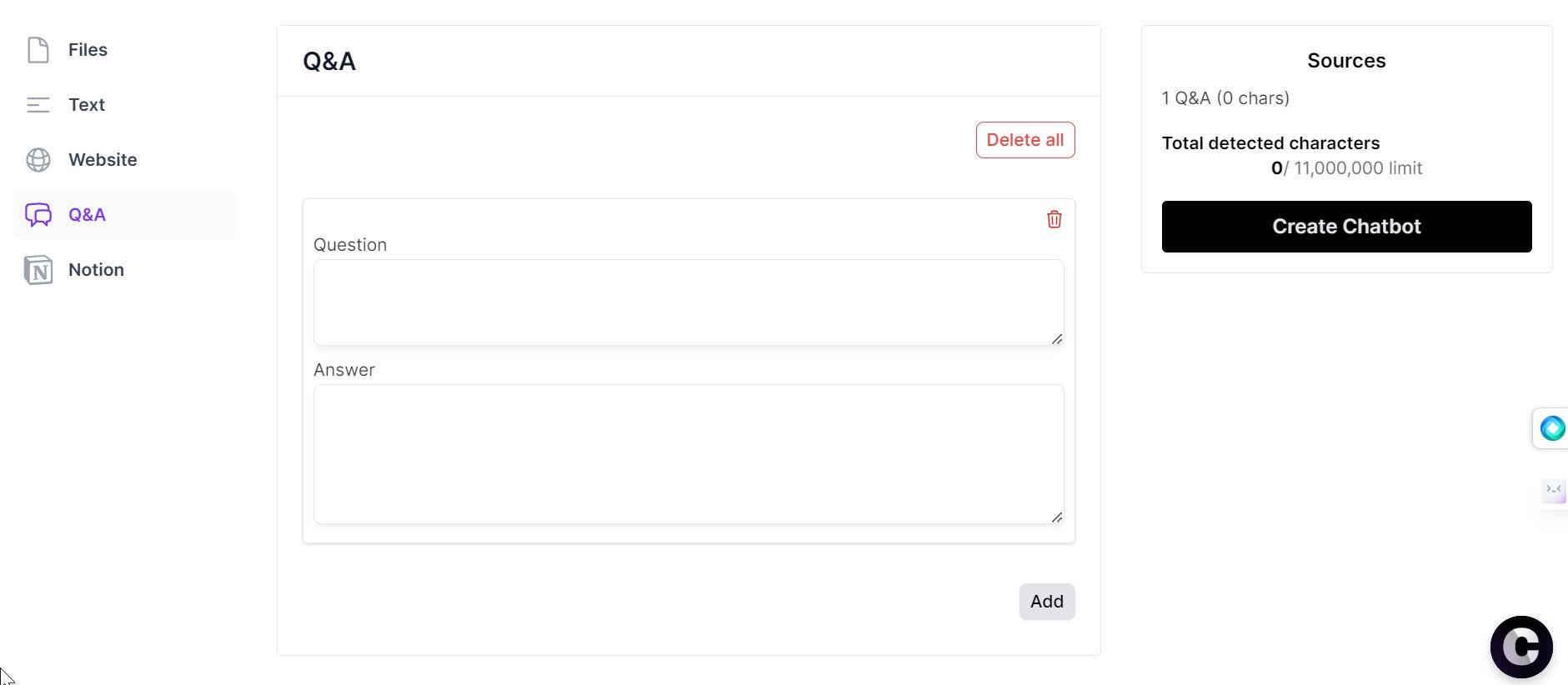

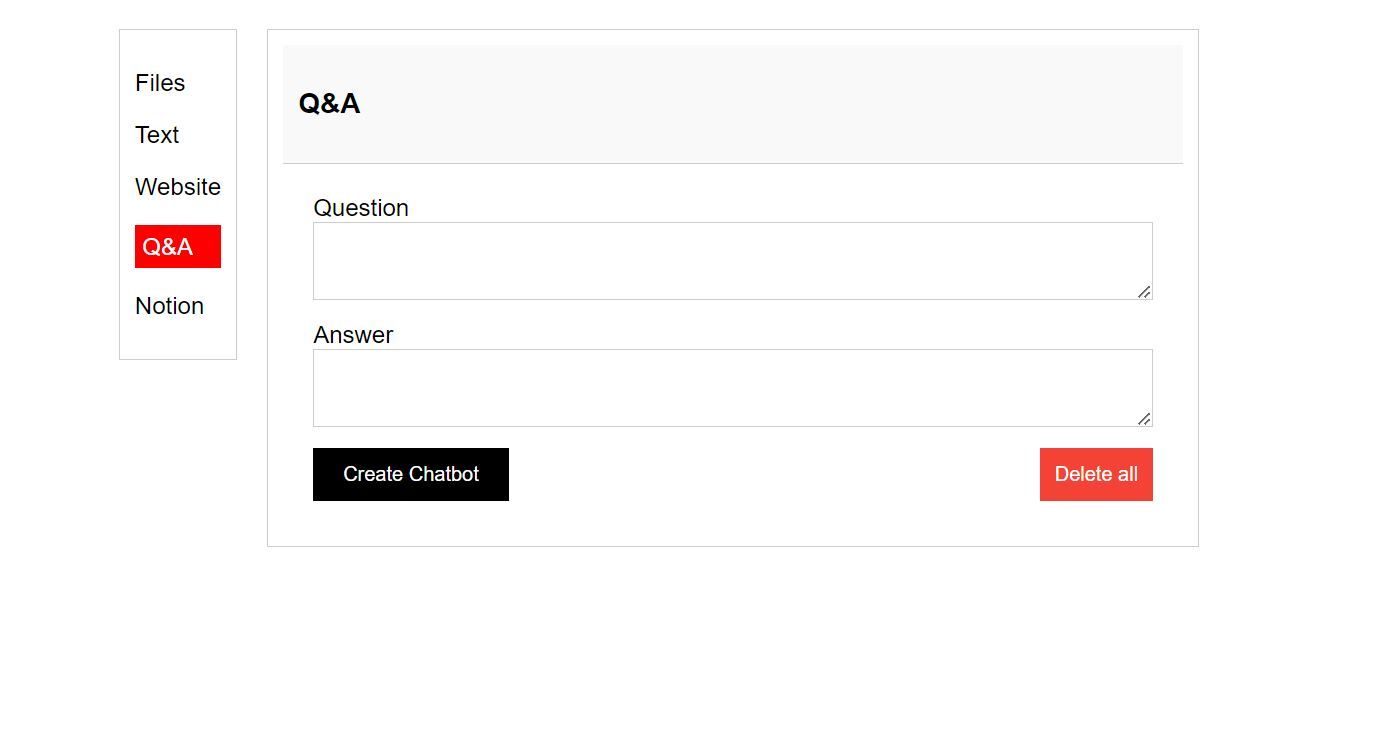

We made another attempt to get Gemini to interpret an image, this time also testing its problem-solving and coding abilities. We gave Bard, running Gemini Pro, a screenshot and asked it to interpret the screenshot and write HTML and CSS code to replicate it.

Here’s the source screenshot.

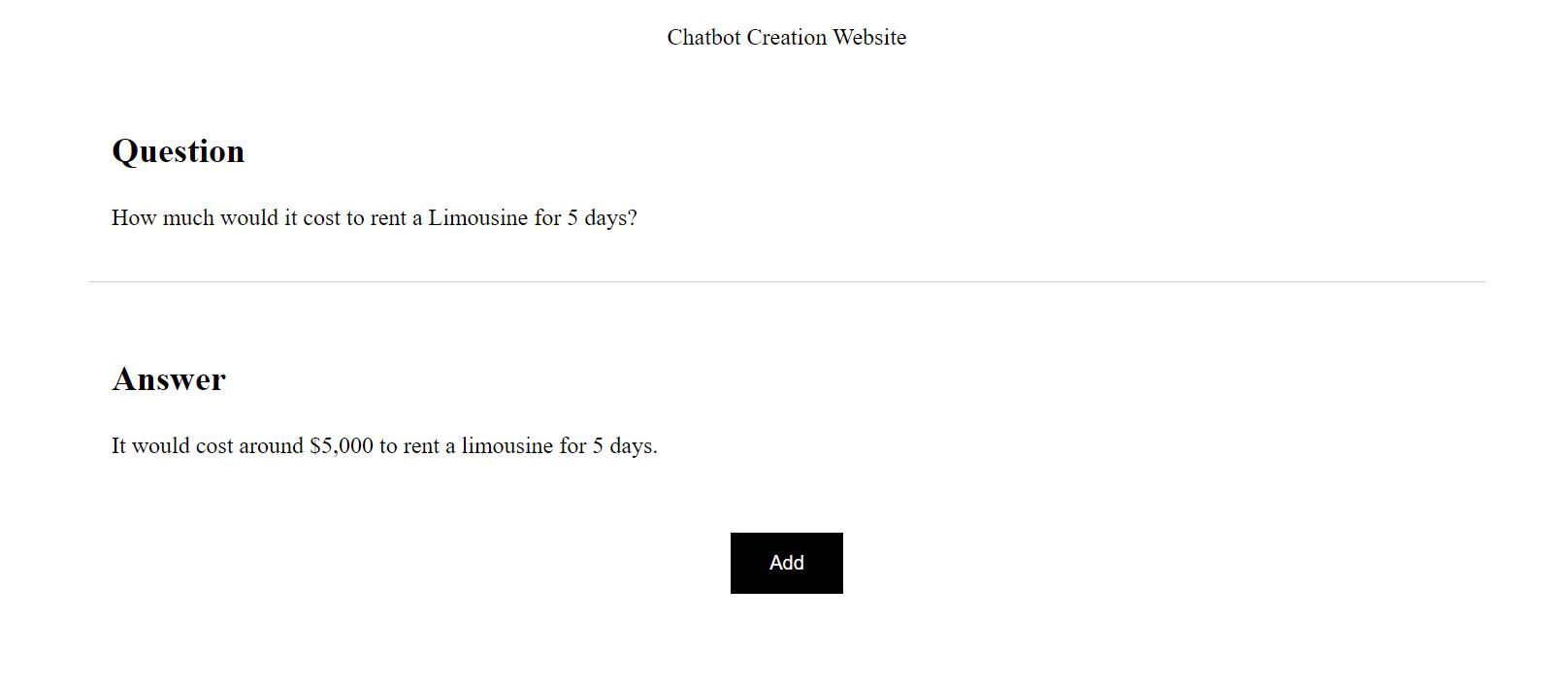

Below is Gemini Pro’s attempt to interpret and replicate the screenshot using HTML and CSS.

And here’s GPT-4’s attempt at replicating the screenshot. The result is not surprising, considering GPT-4 has historically been strong at coding. We’ve previously demonstrated using GPT-4 to build a web app from scratch.

We asked Gemini Pro to write a poem about Tesla (the electric vehicle brand). It showed marginal improvement over our previous tests. Here’s the result:

At this point, we thought comparing the results against GPT-3.5 rather than the supercharged GPT-4 would be more appropriate. So, we asked ChatGPT running GPT-3.5 to create a similar poem.

It may come down to personal taste, but Gemini Pro’s poem seems better to us. We’ll let you be the judge.

Is Gemini Better than ChatGPT?

Before Google launched Bard, we thought it would be the ChatGPT competitor we had been waiting for. It wasn’t. Now Gemini is here, and so far, Gemini Pro doesn’t look like the model to deliver ChatGPT the knockout punch.

Google says Gemini Ultra is going to be much better. We truly hope it is, and that it meets or exceeds the claims made in the Gemini Ultra announcement. But until we can test the best version of Google’s generative AI model, we won’t know whether it can unseat its competitors. As it stands, GPT-4 remains the undisputed AI model champion.

- Title: How Does Google's New Gemini AI Compare to Microsoft's ChatGPT: An In-Depth Analysis?

- Author: Jeffrey

- Created at : 2025-01-23 16:04:32

- Updated at : 2025-01-25 17:56:22

- Link: https://tech-haven.techidaily.com/how-does-googles-new-gemini-ai-compare-to-microsofts-chatgpt-an-in-depth-analysis/

- License: This work is licensed under CC BY-NC-SA 4.0.