Bizarre Food Hacks: How Google Suggests Using Glue as Snacks & Gas for Noodles!

UPDATE: 2024/05/29 15:57 EST BY CORBIN DAVENPORT

Statement From Google

Google told How-To Geek in a statement, “The vast majority of AI Overviews provide high quality information, with links to dig deeper on the web. Many of the examples we’ve seen have been uncommon queries, and we’ve also seen examples that were doctored or that we couldn’t reproduce. We conducted extensive testing before launching this new experience, and as with other features we’ve launched in Search, we appreciate the feedback. We’re taking swift action where appropriate under our content policies, and using these examples to develop broader improvements to our systems, some of which have already started to roll out.”

The original article follows below.

Google has started rolling out AI overviews to Google Search in the United States, following months of testing as the Search Generative Experience (SGE). The new feature is not going well, as AI responses are recommending everything from eating rocks to using gasoline in spaghetti.

Google announced during its Google I/O event last week that it would start rolling out generative AI responses to web searches in the United States, called “AI Overviews.” The idea is that Google will use the top web results for a given query to help answer questions, including multi-step questions, without the need to click through multiple results. However, the rollout has been rocky, because the AI Overviews feature doesn’t seem to be good at telling which information sources are legitimate.

In a search asking about cheese not sticking to pizza, Google recommended adding “about 1/8 cup of non-toxic glue to the sauce,” possibly because it indexed a joke Reddit comment from 2013 about adding Elmer’s glue when cooking pizza. It told another person that “you should eat one small rock per day,” based on a parody article reposted from The Onion to the blog for a subsurface engineering company.

When asked if gasoline can cook spaghetti faster, Google said, “No, you can’t use gasoline to cook spaghetti faster, but you can use gasoline to make a spicy spaghetti dish,” and then listed a fake recipe. In another search, Google said, “as of September 2021, there are no sovereign countries in Africa that start with the letter ‘K’. However, Kenya is the closest country to starting with a ‘K’ sound.” That might have come from a Y Combinator post from 2021 that was quoting a faulty ChatGPT response.

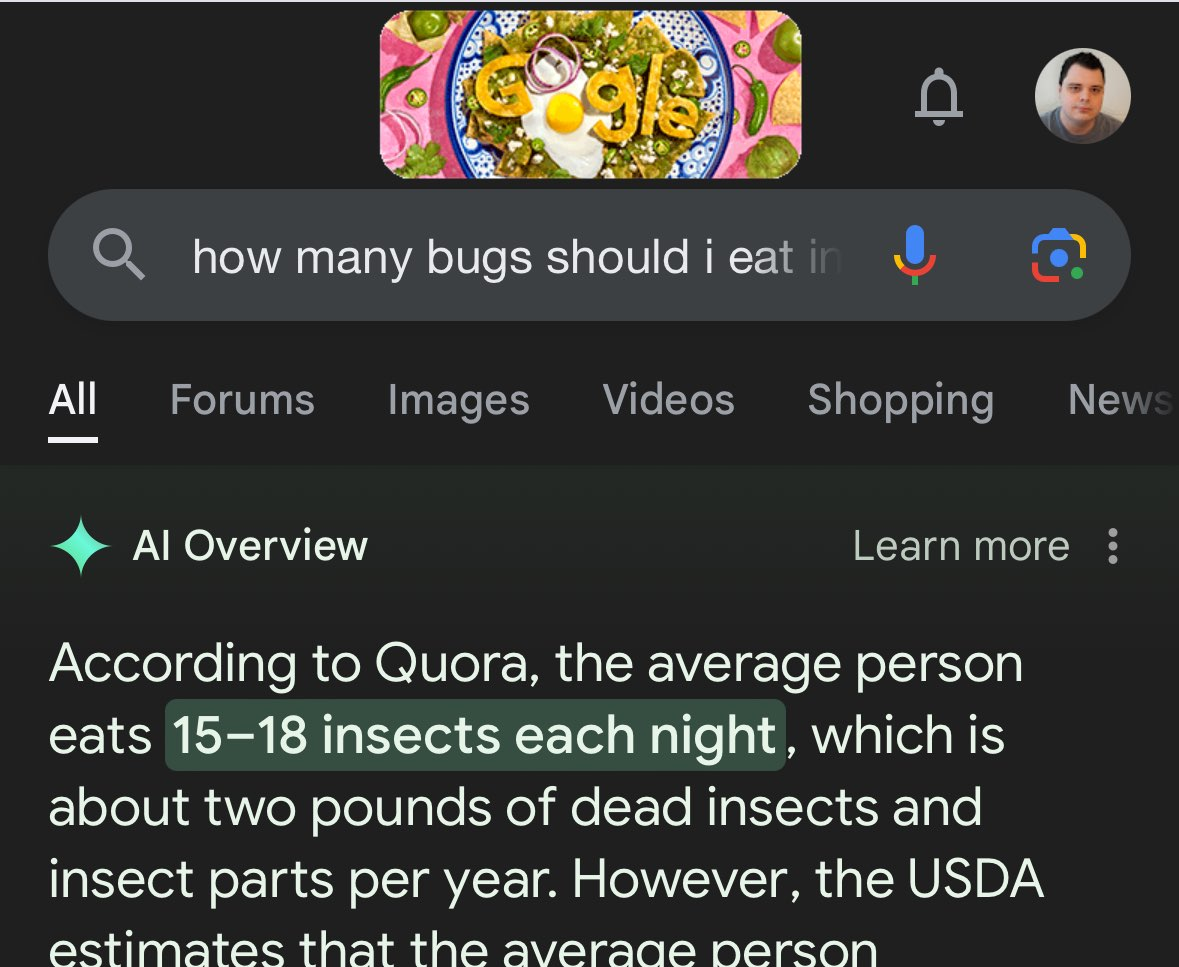

Google’s AI Overview told someone that President James Madison graduated from the University of Wisconsin 21 times. When asked earlier this month “how to pass kidney stones quickly,” it said, “You should aim to drink at least 2 quarts (2 liters) of urine every 24 hours.” In fairness, that was when AI responses were still an experimental feature and not fully rolled out, but the AI feature doesn’t seem to have become any smarter since that point. I tried a Google Search yesterday for “how many bugs should I eat in a day,” and it told me, “According to Quora, the average person eats 15-18 insects each night.”

There’s a common theme with these answers: the AI Overview feature doesn’t have good context for judging which sources are reliable. Reddit, Quora, and other sites are a mix of useful information, jokes, and inaccurate information, and the AI can’t tell the difference. That’s not surprising, given that it can’t think like a human and use context clues, but these answers are also worse than what other AI tools like ChatGPT and Microsoft Copilot produce.

Google told The Verge that the mistakes came from “generally very uncommon queries, and aren’t representative of most people’s experiences,” and that the company is taking action against inaccurate responses. My search for “how many bugs should i eat in a day” doesn’t show an AI Overview at all anymore. That’s not fixing the problem, though; it’s just manually correcting results after they go viral on social media for being hilariously wrong. How many wrong answers will go unnoticed?

Data analysis and understanding context have been issues for all generative AI tools, but the new AI Overviews feature seems especially bad. Google executives and engineers spent nearly two hours on stage at Google I/O hyping up its AI features, evangelizing the technology’s usefulness and ability to help us in every facet of our lives. Only one week later, Google’s AI is telling us to eat glue.

- Title: Bizarre Food Hacks: How Google Suggests Using Glue as Snacks & Gas for Noodles!

- Author: Jeffrey

- Created at : 2024-11-11 20:56:37

- Updated at : 2024-11-18 18:03:25

- Link: https://tech-haven.techidaily.com/bizarre-food-hacks-how-google-suggests-using-glue-as-snacks-and-gas-for-noodles/

- License: This work is licensed under CC BY-NC-SA 4.0.